🔱 Hydra EMU - High Performance Cluster

The Hydra High-Performance Computing (HPC) cluster is a key computational resource of the Laboratory of Theoretical Astrophysics at the School of Arts, Sciences and Humanities, University of São Paulo. Funded by FAPESP grant 2022/03972-2, Hydra supports large-scale simulations in astrophysical research, including studies of turbulence, magnetic reconnection, and cosmic ray acceleration.

💻 System Overview

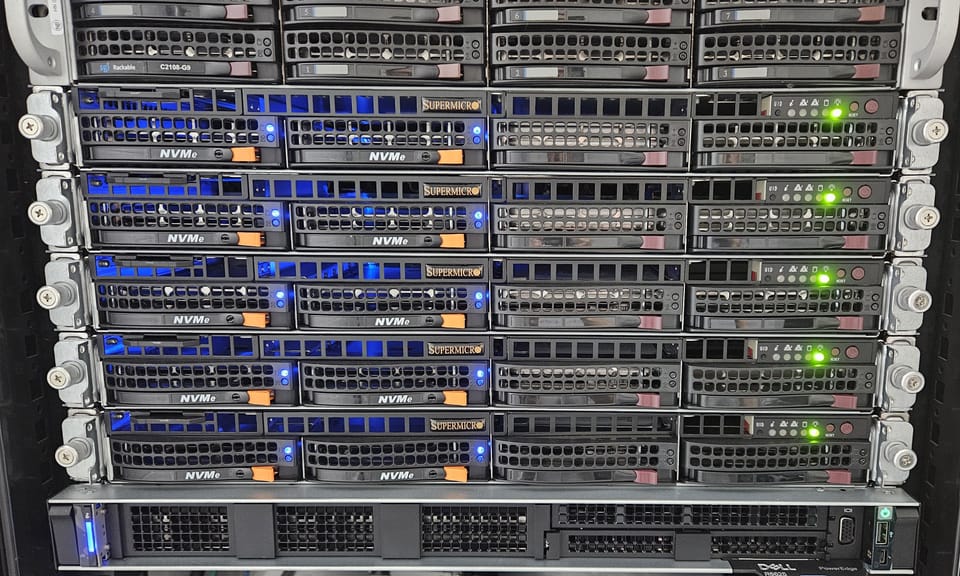

Hydra HPC is composed of:

- 5 compute nodes

- Each node has:

  - 2× AMD EPYC 7662 (64-core) processors

  - 512 GB RAM

- Total capacity: 640 CPU cores, 2.5 TB of RAM

- Storage: BeeGFS parallel file system

- Networking: 10 Gb Ethernet backbone

This architecture is tailored for massively parallel numerical simulations and intensive data workloads.

🔐 Requesting Access

Hydra HPC access is available to:

- Students and researchers affiliated with the Laboratory

- Approved external collaborators

To request access, please email grzegorz.kowal (at) usp.br with the following:

- Full name and institutional affiliation

- Brief project description

- Estimated computing needs (CPU hours, RAM, storage)

- Expected usage duration

All users must agree to the acceptable use policy before accessing the system.

🔑 Accessing the Cluster

Cluster access is provided via SSH. You will receive a login username and must upload an SSH public key.

Login address: lat.each.usp.br

Authentication is done using SSH keys. Two-factor authentication may be added in the future.
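As a convenience, an entry in your SSH client configuration can shorten the login command. The sketch below assumes a placeholder username `your_username` and a hypothetical key file `~/.ssh/id_ed25519_hydra` (for example, one created with `ssh-keygen -t ed25519 -f ~/.ssh/id_ed25519_hydra`). Neither name comes from the cluster administrators, so adjust both to match your account.

```shell
# Append a host alias for Hydra to the SSH client configuration.
# "your_username" and the key file name are placeholders, not real values.
SSH_CONFIG="${SSH_CONFIG:-$HOME/.ssh/config}"
mkdir -p "$(dirname "$SSH_CONFIG")"

cat >> "$SSH_CONFIG" <<'EOF'
Host hydra
    HostName lat.each.usp.br
    User your_username
    IdentityFile ~/.ssh/id_ed25519_hydra
EOF

# OpenSSH rejects a config file that other users can write to.
chmod 600 "$SSH_CONFIG"
```

With this entry in place, `ssh hydra` should behave like `ssh your_username@lat.each.usp.br` using the listed key.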

⚙️ Running Simulations

Hydra uses the SLURM scheduler.

Basic workflow:

- Prepare simulation input and job script

- Submit the job:

sbatch my_job_script.sh

- Monitor using:

squeue

sacct
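The submit and monitor steps above can be wrapped in a small shell function. This is a sketch, not an official cluster tool: the function name `hydra_submit` is made up for illustration, while `sbatch --parsable`, `squeue -j`, and `sacct -j` are standard SLURM options.

```shell
# Hypothetical helper: submit a job script, then show its queue and accounting entries.
hydra_submit() {
    script="${1:-my_job_script.sh}"

    # --parsable makes sbatch print only the job ID (optionally followed by ";cluster").
    jobid="$(sbatch --parsable "$script")" || return 1
    jobid="${jobid%%;*}"
    echo "Submitted job $jobid"

    # Queue state while the job is pending or running.
    squeue -j "$jobid"

    # Accounting record; this keeps working after the job has left the queue.
    sacct -j "$jobid" --format=JobID,JobName,State,Elapsed,ExitCode
}
```

After sourcing the function, `hydra_submit my_job_script.sh` submits the script and prints its status. To list all of your own jobs, `squeue -u "$USER"` is often enough.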

Default policies:

- Fair-share job scheduling

- Max wall time: 48h

- Max cores per user: 128

Example job scripts are available upon request.
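Until you obtain the official examples, the following is a minimal sketch of what a job script might look like within the default limits listed above (128 cores, 48 hours). It assumes an MPI executable named `./my_simulation` built with the installed OpenMPI. That name, the job name, and the log file pattern are placeholders, and the cluster may require additional options (such as a partition or account) that are not documented here.

```shell
# Write a sample job script; every name in it is a placeholder.
cat > my_job_script.sh <<'EOF'
#!/bin/bash
# Placeholder job name shown in squeue and sacct.
#SBATCH --job-name=my_simulation
# One node provides 2 x 64 = 128 cores.
#SBATCH --nodes=1
# 128 MPI tasks stay within the per-user core limit.
#SBATCH --ntasks=128
# Maximum wall time allowed by default (HH:MM:SS).
#SBATCH --time=48:00:00
# Log file named <job-name>-<job-id>.out.
#SBATCH --output=%x-%j.out

# Launch one MPI rank per allocated task (assumes an OpenMPI-built executable).
mpirun -np "$SLURM_NTASKS" ./my_simulation
EOF
```

Requesting fewer tasks or a shorter wall time than the maximum generally helps a job start sooner under fair-share scheduling.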

📄 Documentation & Help

- Internal documentation is available for module usage, job submission, and troubleshooting

Support contact:

📧 grzegorz.kowal (at) usp.br

📊 Monitoring and Modules

Cluster Dashboard

Hydra provides a real-time monitoring dashboard using Grafana:

📈 https://lat.each.usp.br:3000/

Users can track node usage, job activity, and system health metrics through this web interface.

In-Cluster JupyterLab

For direct, browser-based data analysis without needing to transfer data externally, users can access:

🧪 https://lat.each.usp.br:8000/

The JupyterLab environment runs on the cluster, allowing Python-based workflows, data visualization, and post-processing directly where the data resides. Access is granted after user account creation.

🧪 Installed Software

Some of the pre-installed tools include:

- OpenMPI

- GCC toolchain

Additional software can be installed by users or requested via the administrator.

🔁 Data Transfer

Use the following tools for data upload/download:

- rsync

- scp

- sftp

We recommend frequent backups of critical data to external storage systems.
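For large simulation outputs and routine backups, `rsync` is usually the most convenient of these tools, because an interrupted transfer can be re-run and only missing or changed files are sent again. The sketch below wraps typical invocations in two helper functions. The function names, the `HYDRA_USER` variable, and any paths you pass in are illustrative assumptions, so substitute your own username and directories.

```shell
# Placeholder account name; replace with the username you were given.
HYDRA_USER="${HYDRA_USER:-your_username}"
HYDRA_HOST="lat.each.usp.br"

# Upload a local directory to the cluster: hydra_push <local_dir> <remote_dir>
hydra_push() {
    # -a preserves permissions and timestamps, -z compresses in transit,
    # --partial keeps partially transferred files so a retry can resume.
    rsync -az --partial "$1" "${HYDRA_USER}@${HYDRA_HOST}:$2"
}

# Download results from the cluster: hydra_pull <remote_dir> <local_dir>
hydra_pull() {
    rsync -az --partial "${HYDRA_USER}@${HYDRA_HOST}:$1" "$2"
}
```

For example, `hydra_pull runs/example/ ./backup/example/` (hypothetical paths) would copy the contents of the remote directory into the local one. A trailing slash on the source means "the contents of this directory" rather than the directory itself.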

🌐 Collaboration

Hydra HPC supports collaborative projects. Contact us for details on shared access, project folders, or institutional agreements.

📢 Acknowledgment

If your research using Hydra HPC leads to a publication, please acknowledge support as:

“The computations were performed using the Hydra HPC Cluster at the Laboratory of Theoretical Astrophysics, University of São Paulo, supported by FAPESP grant 2022/03972-2.”

✉️ Contact

For access, support, or collaboration inquiries:

📧 grzegorz.kowal (at) usp.br

🌐 lat.each.usp.br